Google originally wanted to ship updatable NNAPI drivers with Android 12, delivering driver updates through GMSCore, also known as Google Play Services. Those plans were delayed until Android 13, and it is not yet clear when updatable drivers will start rolling out or how they’ll be delivered. Here is what we know about Google’s plans.

Esper Device Management

What is NNAPI?

From natural language processing to object detection to smart replies, machine learning has a lot of applications on Android. Because machine learning is computationally intensive and phones have limited battery life, it’s important for ML workloads to either be run in the cloud or distributed across available on-device processors such as the CPU, GPU, DSP, or NPU. There are tens of thousands of Android device models on the market, though, each running its own fork of AOSP on a chipset from one of several silicon vendors. Thus, it’s not exactly straightforward for developers to know which operations are supported and which processors are available to distribute the workload to. To solve this problem, Google offers the Neural Networks API (NNAPI), an Android C API designed for running computationally intensive machine learning operations on Android devices.

NNAPI supports hardware-accelerated on-device inferencing on Android devices running Android 8.1 or higher. Developers first build and train their neural networks in a higher-level machine learning framework such as TensorFlow or Caffe. Then, they use an SDK offered by the silicon vendor (such as the Qualcomm Neural Processing SDK in the case of Snapdragon mobile platforms) to load and run their neural network with hardware acceleration support on the device. The SDK then calls the NNAPI, giving the model to the NNAPI runtime, a shared library that sits between an app and backend drivers. The NNAPI runtime distributes the computation workload across on-device processors for which specialized vendor drivers are available. Here is a diagram that shows the high-level system architecture for NNAPI.

Silicon vendors write Hardware Abstraction Layers (HALs) to abstract the various processors; these drivers must conform to the Neural Networks HAL specification so the Android framework can interface with the driver. The following diagram depicts the general flow of the interface between the Android framework and the vendor driver.
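Separately from the diagrams, a toy model can make the runtime's dispatch role more concrete. The Python sketch below is not the NNAPI C API and not how the real runtime partitions a model; it only illustrates the idea that each driver advertises the operations it supports and that anything no driver can handle falls back to the CPU. The `Driver` class, the `assign_operations` function, the driver names, and the supported-operation sets are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass

CPU_FALLBACK = "cpu-fallback"


@dataclass(frozen=True)
class Driver:
    """Hypothetical stand-in for a vendor driver sitting behind the NN HAL."""
    name: str
    supported_ops: frozenset


def assign_operations(model_ops, drivers):
    """Assign each operation to the first driver that supports it.

    Drivers are tried in list order; operations that no driver supports
    are assigned to the CPU fallback. This is a deliberate simplification.
    """
    plan = []
    for op in model_ops:
        target = next(
            (d.name for d in drivers if op in d.supported_ops),
            CPU_FALLBACK,
        )
        plan.append((op, target))
    return plan


# Hypothetical device with two accelerators (names and op sets are made up).
drivers = [
    Driver("npu", frozenset({"CONV_2D", "DEPTHWISE_CONV_2D"})),
    Driver("gpu", frozenset({"CONV_2D", "ADD", "SOFTMAX"})),
]
model_ops = ["CONV_2D", "ADD", "HARD_SWISH", "SOFTMAX"]

plan = assign_operations(model_ops, drivers)
for op, target in plan:
    print(f"{op} -> {target}")
```

In this sketch the order of the `drivers` list stands in for whatever preference the real runtime applies when more than one processor can execute an operation.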

The plan to make NNAPI updatable

Although NNAPI simplifies deploying machine learning models on Android, it suffers from fragmentation, like some other Android APIs. NNAPI is updated alongside the Android OS framework, so the supported operations vary across OS versions. Updates to the NNAPI runtime were likewise tied to Android OS updates, making its behavior inconsistent across devices. The NN HAL and drivers, meanwhile, require silicon vendors to ship updates and device makers to then integrate them, so feature support may vary even across devices with the same chipset. As a result, developers can’t depend on NNAPI being consistent across Android versions.
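To illustrate the OS-version side of this fragmentation, here is a minimal Python sketch that checks which operations in a model predate a device's API level. The table is a small illustrative subset, and the API levels reflect our understanding of when each operation was introduced in the NDK; it is worth verifying them against the NNAPI reference before relying on them. The `unsupported_ops` helper is hypothetical, and the sketch ignores the driver side entirely, where support can differ even at the same API level.

```python
# Illustrative subset only: the Android API level at which each NNAPI
# operation is understood to have been introduced (27 = Android 8.1,
# 28 = Android 9, 29 = Android 10, 30 = Android 11).
MIN_API_LEVEL = {
    "ADD": 27,
    "CONV_2D": 27,
    "SOFTMAX": 27,
    "TRANSPOSE": 28,
    "ARGMAX": 29,
    "HARD_SWISH": 30,
}


def unsupported_ops(model_ops, device_api_level):
    """Return the operations that are newer than the device's API level."""
    return [op for op in model_ops if MIN_API_LEVEL[op] > device_api_level]


model_ops = ["CONV_2D", "TRANSPOSE", "HARD_SWISH"]

for api_level in (27, 28, 30):
    missing = unsupported_ops(model_ops, api_level)
    print(f"API {api_level}: unavailable operations = {missing}")
```

The same model therefore needs a different fallback strategy depending on the OS version it lands on, which is the inconsistency an updatable runtime is meant to reduce.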

To improve the consistency and performance of machine learning operations on Android, Google announced Android’s “updateable, fully integrated ML inference stack” at last year’s Google I/O. First, Google planned to bundle a TensorFlow Lite runtime with Play Services, so developers would no longer need to bundle it with their own apps. Second, Google moved the NNAPI runtime from the core OS framework to a modular system component in APEX format, allowing updates to be delivered through Google Play. Finally, Google worked with Qualcomm and promised that updates to NNAPI drivers would be rolled out via Google Play Services on devices running Android 12. Qualcomm also committed to backporting new features for the commercial lifetime of a chipset and to delivering updates that would be backward compatible with older Snapdragon chipsets. The Android ML team appeared to have a plan to solve NNAPI fragmentation, but the exact mechanism for delivering driver updates is evidently still a work in progress.

Following Google’s announcement at I/O, the Android ML team submitted multiple patches to AOSP that reverted the code changes that enabled updatable NNAPI drivers. The commit description for one of these patches stated that the Android ML team had delayed their updatable platform driver plans to Android 13. The reverted changes would have added a new shared library called libneuralnetworks_shim, which would have allowed the Android team to update the calls in the libneuralnetworks library by shimming them. The reverts came mere days after Google and Qualcomm had announced that NNAPI driver updates would roll out to devices running Android 12, yet no public announcement of the delay to Android 13 was ever made.

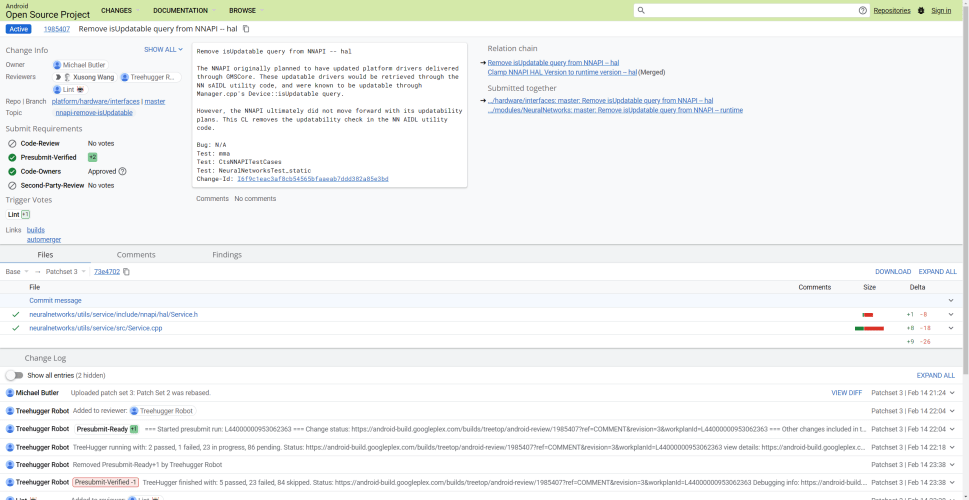

This week, however, the Android ML team submitted new patches that remove all remaining isUpdatable queries in the NNAPI runtime and HAL. The purpose of these patches, as explained in their commit descriptions, is to remove existing code related to NNAPI updatability as the Android ML team “ultimately did not move forward with its updatability plans.” The Android ML team “originally planned to have updated platform drivers delivered through GMSCore,” which refers to Google Play Services. This statement is in line with what Google and Qualcomm announced last year, so it seems the Android ML team has more work to do before they’re ready to ship updatable NNAPI drivers.

Code changes removing the isUpdatable query from the NNAPI runtime and NN HAL

However, Oli Gaymond, Product Lead on the Android ML team, has confirmed that Google still plans to ship updatable NNAPI drivers. “There have been some design changes,” he said on Twitter, adding that the team is “currently in testing.” When asked if updated NNAPI platform drivers will be delivered via GMSCore, he stated that “they will still be delivered via Play Services,” and said that Play Services “remains a key part of our distribution plan to give developers as consistent an experience as possible across OS versions and push updates faster.” Google currently delivers updates to the TensorFlow Lite runtime and NNAPI runtime via GMSCore, so this statement is consistent with how the company already uses GMSCore for NNAPI updates.

It is unclear what, if any, architectural changes have been made to enable NNAPI driver updates, but we will monitor AOSP and developer documentation to find out.

I would like to thank developer Luca Stefani for informing me about the recent NNAPI code changes.

This article and its title were updated at 2:00 PM PT on 2/21/2022 to reflect additional information brought to light by the Product Lead on the Android ML team. It was updated again at 12:58 PM PT on 2/23/2022 to add confirmation that NNAPI driver updates will still be delivered via Play Services.
