With the sheer number of new smartphones launching each year, it’s difficult for any single product launch to really up the ante. Hardware improvements are often incremental, because there’s just not enough lead time to engineer something truly groundbreaking when you have to launch a successor the next year. That’s why many feel that traditional smartphones are boring.

I disagree with that notion — I still think the rectangular glass slab is amazing! Every time I grow bored with phones, I remind myself to appreciate the engineering that goes into them. It’s incredible how many components can fit into such a tiny chassis, and every year, smartphone makers find new ways to cram more hardware into them.

Many of these components are physical sensors, such as accelerometers, gyroscopes, ambient light sensors, barometers, and proximity sensors, which are often packed together and sold as a single system-in-package to save space. The data these sensors collect underpin some of the most basic features of a smartphone, such as changing the UI orientation or toggling the speakerphone, but smartphone makers also use the data to power more advanced features. By fusing data from two or more physical sensors, device makers can create what’s called a composite sensor. Through clever combinations of physical sensors, advanced filtering and processing algorithms, and a dash of ingenuity, companies like Google have created innovative features that make many think, “a smartphone can do that?”
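
To make “fusing” more concrete, here is a minimal sketch of one classic fusion technique: a complementary filter that combines gyroscope and accelerometer readings into a single tilt (pitch) estimate. It is a generic textbook approach shown for illustration. It is not how Android or any particular device maker actually builds its composite sensors. The sample layout, the function names, and the `alpha` weight are all assumptions.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle in radians implied by gravity alone, from one accelerometer sample (m/s^2)."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyroscope and accelerometer data into a running pitch estimate.

    samples: iterable of (pitch_rate_rad_s, ax, ay, az) tuples (hypothetical layout).
    dt:      time between samples in seconds.
    alpha:   weight given to the integrated gyro angle; (1 - alpha) goes to the
             accelerometer's absolute, gravity-based angle.
    """
    estimates = []
    angle = None
    for rate, ax, ay, az in samples:
        measured = accel_pitch(ax, ay, az)
        if angle is None:
            # Seed the estimate with the accelerometer's absolute reading.
            angle = measured
        else:
            # The integrated gyro angle is smooth but drifts over time; the
            # accelerometer angle is noisy but drift-free. Blend the two.
            angle = alpha * (angle + rate * dt) + (1 - alpha) * measured
        estimates.append(angle)
    return estimates
```

Consider a phone lying flat whose gyroscope reports a small constant bias. Integrating the gyro alone would drift without bound. The filtered estimate instead settles near a small constant, because the accelerometer term keeps pulling it back toward the true angle.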

In this week’s edition of Android Dessert Bites, I’ll be covering some of these innovative, sensor-driven features (I already spoiled one of them in the title), as well as the hardware and software frameworks that make them possible.

From “chop chop” to “drop, cover, and hold”

As a way to differentiate its early smartphones, Motorola experimented with gestures that sped up certain tasks. For example, the 2013 Moto X introduced a gesture to quickly launch the camera app by twisting the phone a few times, while an update to the 2014 Moto X introduced a gesture that toggles the flashlight when you move the phone in a chopping motion. The latter gesture, which became known as “chop chop,” is popular enough that it features prominently in Motorola’s marketing. The Moto Actions app, which provides these gestures (and more), has been installed on over 100 million Motorola devices, so a huge number of people have had a chance to try them.

Even though Motorola’s gestures aren’t all that special today, they paved the way for other smartphone makers to think of innovative ways to translate motion events into features. I can think of no better example than Google, which has developed not one, but two potentially lifesaving features that use basic sensors found on nearly all smartphones.

Using the accelerometer, Google can turn your Android phone into a mini seismometer to help detect earthquakes. If all it took was an accelerometer to detect earthquakes, then we’d have had mini seismometers in our pockets for over a decade now, but there’s obviously more to it. It’s not just the raw data that’s important but also how that data is being processed and used. Google analyzes aggregated accelerometer data from thousands of phones to determine if the shaking they’re experiencing is likely to be caused by the P wave or S wave of an earthquake. (For a more thorough explanation of Google’s early earthquake detection network, check out this article from The Verge.)
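
Google hasn’t spelled out its full pipeline here. As a hedged illustration, here is a classic seismology trigger, the short-term average / long-term average (STA/LTA) ratio, that a single phone could use to flag sudden shaking. It is followed by a hypothetical aggregation step that reports an earthquake only when enough phones in an area trigger together. The window lengths, thresholds, and function names are assumptions, not Google’s parameters.

```python
def sta_lta_triggers(signal, sta_len, lta_len, threshold):
    """Classic STA/LTA trigger on a single accelerometer trace.

    Returns the sample indices at which the short-term average of |signal|
    first rises to `threshold` times the long-term average. After a trigger,
    the detector re-arms only once the ratio falls back below the threshold.
    """
    triggers = []
    armed = True
    for end in range(lta_len, len(signal) + 1):
        # Both averages use trailing windows that end at sample `end - 1`.
        sta = sum(abs(x) for x in signal[end - sta_len:end]) / sta_len
        lta = sum(abs(x) for x in signal[end - lta_len:end]) / lta_len
        ratio = sta / lta if lta > 0 else 0.0
        if ratio >= threshold:
            if armed:
                triggers.append(end - 1)
                armed = False
        else:
            armed = True
    return triggers


def region_reports_quake(phone_triggered, min_phones=10, min_fraction=0.5):
    """Hypothetical aggregation step: only report shaking for a region when
    enough phones are reporting and enough of them triggered together."""
    total = len(phone_triggered)
    hits = sum(1 for triggered in phone_triggered if triggered)
    return total >= min_phones and hits / total >= min_fraction
```

Requiring agreement across many phones is what makes this kind of approach robust. A single phone’s accelerometer spikes whenever the phone is dropped or jostled, but many phones in the same area rarely shake at once unless the ground itself is moving.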

Detecting an earthquake this way makes sense, and Google isn’t even the first to do it. But what should work in theory and what actually works in practice can be at odds, and closing that gap takes the best data processing methods available. Google has no shortage of talented engineers who can come up with these algorithms or find new ways to use sensor data.

With the launch of the Pixel 4 in 2019, Google introduced the “Personal Safety” app with a feature that senses whether you’ve been in a car crash and, if so, calls emergency services on your behalf. Using accelerometer data and audio picked up by the microphone, the feature can detect the telltale signs of a car crash.
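
Google hasn’t published the details of its crash detection algorithm. The sketch below only illustrates the general idea of combining two signals: it flags a possible crash when a large acceleration spike and a very loud sound occur close together in time. The `Sample` type, the thresholds, and the time window are made-up values for illustration.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t: float         # timestamp in seconds
    accel_g: float   # acceleration magnitude in g
    audio_db: float  # sound level in dB (hypothetical output of an audio pipeline)


def looks_like_crash(samples, g_threshold=8.0, db_threshold=120.0, window_s=1.0):
    """Return True if a high-g spike and a loud sound occur within `window_s` seconds of each other.

    Either signal alone is ambiguous (a dropped phone spikes the accelerometer;
    a concert is loud), so this toy rule requires both.
    """
    spikes = [s.t for s in samples if s.accel_g >= g_threshold]
    bangs = [s.t for s in samples if s.audio_db >= db_threshold]
    return any(abs(a - b) <= window_s for a in spikes for b in bangs)
```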

The context behind the sensor hub

Google makes clever use of sensor data to provide the car crash detection feature, but in order for the feature to actually be useful, it needs to be able to detect car crashes at all times. After all, it wouldn’t be very helpful if your phone only sometimes called emergency services when you’re involved in a car crash!

So how is the Pixel able to detect car crashes at all times? It continuously polls the accelerometer and microphone, then processes that data to check whether there’s been a crash. You can probably see the potential problem here. Smartphones don’t have infinite power at their disposal: they can run only as long as their Li-ion battery has charge. Because the sensors have to be polled continuously for this feature to work, any optimizations have to happen on the data processing side. That’s where a sensor hub comes in.

A sensor hub, also known as a context hub, is a low-power processor that is specially designed to process data from sensors. Sensor data processing is offloaded to the sensor hub instead of running on the more power-hungry main applications processor (AP). The sensor hub can then wake the main AP to take action, either after every individual event or once many events are ready to be processed (i.e., batching).
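
A toy model shows the difference between waking the AP for every event and batching. The `SensorHub` class below is hypothetical. It buffers incoming events and invokes a callback, standing in for waking the main AP, only once a batch is full.

```python
class SensorHub:
    """Toy model of a sensor hub that buffers sensor events and wakes the AP in batches.

    batch_size=1 models waking the AP for every single event; larger values model batching.
    """

    def __init__(self, batch_size, wake_ap):
        self.batch_size = batch_size
        self.wake_ap = wake_ap   # callback standing in for the main applications processor
        self.buffer = []
        self.wakeups = 0

    def push(self, event):
        """Called for each new sensor event; cheap, runs entirely on the hub."""
        self.buffer.append(event)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        """Wake the AP once and hand it everything buffered so far."""
        if self.buffer:
            self.wakeups += 1
            self.wake_ap(list(self.buffer))
            self.buffer.clear()
```

With 1,000 events, a batch size of 1 costs 1,000 AP wakeups, while a batch size of 100 delivers the same events in 10. A real sensor hub can go further by filtering, waking the AP only when the hub’s own code decides something interesting has happened. On the app side, Android exposes sensor batching through the `maxReportLatencyUs` parameter of `SensorManager.registerListener()`.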

Motorola deployed a sensor hub in many of its devices to enable its various gestures without constantly waking the main AP. For example, the Moto G5 Plus appears to use an STM32L051T8 microcontroller, as revealed by developer Rafael Ristovski’s reverse engineering of Motorola’s sensor hub firmware. This MCU has a single Arm Cortex-M0+ core running at up to 32MHz, which requires far less power to operate than the octa-core Qualcomm Snapdragon 625 running at 2.0GHz.

Of course, Motorola isn’t the only company to use a sensor hub in its devices. Google, for instance, has made use of sensor hubs since the days of the Nexus 5X and 6P. Nowadays, you’ll find sensor hub hardware within the DSP block of most mobile SoCs. Sensor hubs became a standard part of Qualcomm’s chipset design starting with the Snapdragon 820, which introduced the Sensor Low Power Island (SLPI) to the Hexagon DSP. Since Google switched to its in-house Tensor silicon, its smartphones no longer use Qualcomm’s SLPI as the sensor hub. Instead, they use what Google calls the “always-on compute” (AoC) island of the Tensor chip.

As you can see, the hardware that serves as the “sensor hub” won’t be the same in every device. Furthermore, because sensor hubs often feature very weak processors and have access to limited amounts of memory and storage, they can’t run a high-level operating system (HLOS) like Android. Instead, they often run a real-time operating system (RTOS) of some sort, which has a deterministic scheduler that guarantees a task is executed within a strictly defined time. An RTOS is more suitable for an embedded system, but there’s one problem: there are a lot of different RTOSes out there.
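
Such timing guarantees are possible partly because an embedded workload can be analyzed ahead of time. The classic Liu and Layland test is one example. It checks whether a set of periodic tasks scheduled with fixed, rate-monotonic priorities is guaranteed to meet every deadline. This is a generic textbook illustration, not a description of how any specific RTOS schedules tasks, and the task parameters are hypothetical.

```python
def rm_utilization_bound(n):
    """Liu & Layland bound for n periodic tasks under rate-monotonic scheduling."""
    return n * (2 ** (1 / n) - 1)


def rm_guaranteed(tasks):
    """tasks: list of (worst_case_exec_time, period) pairs in the same time unit.

    Returns True if total CPU utilization is within the bound, which guarantees
    every task meets its deadline (deadline == period). False means this
    sufficient test is inconclusive, not that a deadline will definitely be missed.
    """
    if not tasks:
        return True
    utilization = sum(c / t for c, t in tasks)
    return utilization <= rm_utilization_bound(len(tasks))
```

Take three tasks: a sensor-reading task that needs 1 ms every 10 ms, a filtering task that needs 2 ms every 20 ms, and a reporting task that needs 5 ms every 50 ms. Together they use 30% of the CPU. That is well under the three-task bound of about 78%, so all three are guaranteed to meet their deadlines.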

There are some popular open source RTOSes like FreeRTOS and Zephyr, but there are also proprietary ones like Qualcomm’s QuRT. The existence of many different software environments makes cross-platform development challenging, so to standardize the software platform on sensor hubs, Google developed the Context Hub Runtime Environment (CHRE).

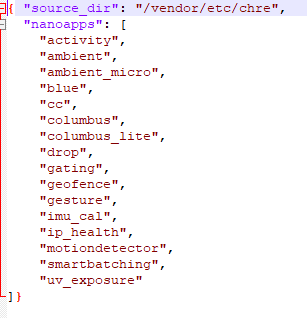

CHRE: A standardized framework for running nanoapps

The CHRE is a framework that sits above the RTOS of the embedded controller. It provides an API that defines the software interface between small native applications written in C or C++, called nanoapps, and the system. These nanoapps can process sensor data much more frequently and efficiently than an Android app can while the device’s screen is off. And because the CHRE API is standardized across all CHRE implementations, nanoapps that are written to that API are code compatible across devices. This means that a nanoapp’s source code doesn’t need to be changed to run on a different sensor hub, though the nanoapp may need to be recompiled.
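
Real nanoapps are written in C or C++ against the CHRE API’s `chre.h` header and implement three entry points: `nanoappStart()`, `nanoappHandleEvent()`, and `nanoappEnd()`. The minimal Python sketch below mirrors those three entry points to show the shape of that event-driven model without a CHRE toolchain. The `ToyRuntime` class, the string event types, and the `ShakeCounter` nanoapp are hypothetical. In the real API, event types are numeric constants, and a nanoapp subscribes to sensors through calls such as `chreSensorFindDefault()` and `chreSensorConfigure()`.

```python
class Nanoapp:
    """Base class mirroring the three entry points every CHRE nanoapp implements in C."""

    def start(self):
        # ~ nanoappStart(): return False to abort loading.
        return True

    def handle_event(self, sender_instance_id, event_type, event_data):
        # ~ nanoappHandleEvent(): called once per delivered event.
        pass

    def end(self):
        # ~ nanoappEnd(): release resources before unloading.
        pass


class ToyRuntime:
    """Hypothetical stand-in for a CHRE implementation running on top of an RTOS.

    For simplicity it delivers every event to every loaded nanoapp (real CHRE only
    delivers events a nanoapp has registered for).
    """

    def __init__(self):
        self.apps = []

    def load(self, app):
        if app.start():
            self.apps.append(app)
            return True
        return False

    def post_event(self, event_type, event_data, sender_instance_id=0):
        for app in self.apps:
            app.handle_event(sender_instance_id, event_type, event_data)

    def unload_all(self):
        for app in self.apps:
            app.end()
        self.apps.clear()


class ShakeCounter(Nanoapp):
    """Example nanoapp: counts accelerometer readings above a hypothetical g threshold."""

    def __init__(self, threshold_g=2.5):
        self.threshold_g = threshold_g
        self.count = 0
        self.running = False

    def start(self):
        self.running = True
        return True

    def handle_event(self, sender_instance_id, event_type, event_data):
        # Ignore everything except (hypothetical) accelerometer batches.
        if event_type == "ACCEL_DATA":
            self.count += sum(1 for g in event_data if g >= self.threshold_g)

    def end(self):
        self.running = False
```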

Nanoapps integrate with a component on the Android side, called the nanoapp’s client, to enable the feature that’s being targeted. However, only system-trusted Android apps can interact with nanoapps, because the client app must either hold a privileged permission (ACCESS_CONTEXT_HUB) or be signed with the same digital signature used to sign the nanoapp. In practice, then, only the device maker and its trusted partners can create nanoapps, which leaves third-party developers with few options for taking advantage of the sensor hub. Device makers can, however, create nanoapps that power APIs available to third-party apps. On Pixel devices, for example, nanoapps power Google Play Services’ activity recognition and location providers.
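
The access rule for nanoapp clients boils down to a simple check. The sketch below encodes it directly as a toy model of the policy described here. It is not Android’s actual permission-checking code, and the `AndroidApp` type and certificate strings are hypothetical.

```python
from dataclasses import dataclass, field

ACCESS_CONTEXT_HUB = "android.permission.ACCESS_CONTEXT_HUB"


@dataclass
class AndroidApp:
    package: str
    signing_cert: str                              # hypothetical stand-in for the app's signing certificate
    permissions: set = field(default_factory=set)


def may_use_nanoapp(app, nanoapp_signing_cert):
    """True if the client app holds the privileged permission or shares the nanoapp's signer."""
    return ACCESS_CONTEXT_HUB in app.permissions or app.signing_cert == nanoapp_signing_cert
```

A typical third-party app fails both conditions. That is why such apps can benefit from nanoapps only indirectly, through higher-level APIs such as Google Play Services’ activity recognition and location providers.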

The Android framework interfaces with the CHRE through the Context Hub hardware abstraction layer (HAL), which defines APIs for listing the available context hubs and their nanoapps, interacting with those nanoapps, and loading and unloading them. Very few device makers have implemented this part of Android’s reference platform: only Pixel phones and a handful of LG phones declare support.
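
The hypothetical in-memory model below makes the HAL’s responsibilities concrete. It covers the kinds of operations the HAL defines: listing context hubs, listing the nanoapps loaded on a hub, loading and unloading nanoapps, and exchanging messages with them. The class and method names are invented for illustration; the real HAL’s interfaces and signatures differ and have changed across Android versions. Each “nanoapp” here is just a Python callable that answers messages.

```python
class ToyContextHubHal:
    """Hypothetical in-memory model of the operations the Context Hub HAL covers."""

    def __init__(self, hub_ids):
        # hub_id -> {app_id: handler}, where a handler stands in for a loaded nanoapp.
        self._hubs = {hub_id: {} for hub_id in hub_ids}

    def list_hubs(self):
        return sorted(self._hubs)

    def list_nanoapps(self, hub_id):
        return sorted(self._hubs[hub_id])

    def load_nanoapp(self, hub_id, app_id, handler):
        apps = self._hubs[hub_id]
        if app_id in apps:
            return False  # already loaded
        apps[app_id] = handler
        return True

    def unload_nanoapp(self, hub_id, app_id):
        return self._hubs[hub_id].pop(app_id, None) is not None

    def send_message(self, hub_id, app_id, payload):
        handler = self._hubs[hub_id].get(app_id)
        if handler is None:
            raise KeyError(f"nanoapp {app_id:#x} not loaded on hub {hub_id}")
        return handler(payload)
```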

It’s unfortunate, but it’s easy to see why so few device makers have implemented CHRE support. After all, if only OEMs and their trusted partners can create nanoapps, what’s the point of implementing CHRE when the silicon vendor already provides robust SDKs?

Google, being Android’s standard-bearer, naturally chose to implement full CHRE support on its own Pixel devices. It has implemented nanoapps for a range of Pixel-exclusive features, including the aforementioned car crash detection, the Pixel 4’s Soli radar gestures, and the Quick Tap gesture.

Google clearly believes in the future of CHRE: it has pushed to include CHRE as Zephyr’s sensor and message-bus framework. It also recently added FreeRTOS support to CHRE, so it isn’t only supporting the RTOS it prefers for its own embedded controller designs.

Having seen what’s possible with sensor hubs, I’m excited for what’s to come. I can imagine sleep tracking consuming orders of magnitude less power. I can see Wear OS wearables finally having multi-day battery life while enabling all sorts of continuous health monitoring and fitness tracking features. All sorts of new and innovative features are possible — we just have to wait for those with a bit of ingenuity to come up with them.

Thanks for reading this week’s edition of Android Dessert Bites. Although CHRE has been around since Android 7.0 Nougat, there are surprisingly few high-level articles about it. Google’s developer documentation is the best technical resource I could find on it, so I hope this article helped a few of you understand the importance of sensor hubs and what they’re used for.
